Introduction

Probably the first serious effort to build computing machines was made by the Greeks, who built analogue and digital computing devices that used intricate gear systems, although they were subsequently abandoned as being impractical. The first practical "computers" were accounting systems used by the Incas that employed ropes and pulleys. Knots in the ropes represented numerical digits. The Incas used these systems for tax and government records, and stored information about all of the resources of the Inca Empire, allowing efficient allocation of resources in response to disasters such as storms, drought, and earthquakes. All but one of these systems were destroyed by Spanish soldiers acting on the instructions of Catholic priests, who believed that they were the work of the Devil (a view probably still held by some about the computers in use today).

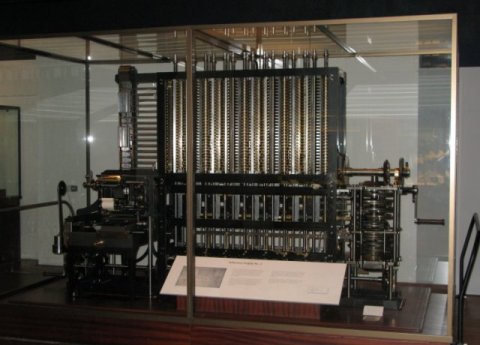

In the early 1800s, Joseph Marie Jacquard invented a programmable device for controlling weaving looms. These devices used punched cards (the forerunners of the later Hollerith cards) for data storage. The cards contained the control codes for the various patterns. In 1822 Charles Babbage presented plans for a machine he called the "Difference Engine" to the Royal Society. It was intended for the calculation of astronomical and mathematical tables, and he received the society's approval and a grant to build the device. Unfortunately, due to various problems, the work was never completed.
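The Difference Engine takes its name from the method of finite differences, which allows a table of polynomial values to be built up using nothing but repeated addition. The following is a minimal sketch of that idea in Python; the function name and the example polynomial are chosen purely for illustration and are not taken from Babbage's designs.

```python
def difference_table(initial_differences, count):
    """Tabulate a polynomial using only additions.

    initial_differences holds f(0), then the first difference, the
    second difference, and so on. For a polynomial, the last entry
    stays constant.
    """
    registers = list(initial_differences)
    values = []
    for _ in range(count):
        values.append(registers[0])
        # Add each higher-order difference into the register below it.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return values


# Example: f(x) = x*x + x + 41.
# f(0) = 41, first difference f(1) - f(0) = 2, second difference = 2.
table = difference_table([41, 2, 2], 6)
print(table)
```

Each pass through the outer loop produces the next table entry without any multiplication, which is what made the approach suitable for a purely mechanical adding machine.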

Babbage meanwhile turned his attention to the design of a more general-purpose calculating machine, which he dubbed the "Analytical Engine". Difficulties in obtaining funding meant that only a few test components were ever actually built. Babbage continued to work on the design, however, and with the collaboration of his long-standing friend Augusta Ada King, Countess of Lovelace, wrote a series of documents describing the operation of the device and formulating the algorithms it would employ. The Countess is considered by some to be the first computer programmer, and the Ada programming language is named after her. In 1991, a difference engine was constructed using Babbage's original drawings, and worked perfectly.

The Babbage Difference Engine (constructed 1991), London Science Museum

In the 1900s, researchers started to experiment with both analogue and digital computers using vacuum tubes. Some of the most successful early computers were analogue computers capable of performing advanced calculus problems. The real future of computing was to be digital, however, and digital computer technology owes much to the technology and mathematical principles used in telephone and telegraph switching networks.

During the 1930s, Konrad Zuse, a construction engineer for the Henschel Aircraft Company in Germany, produced several automatic calculators to assist him with engineering calculations. In 1936, he created a mechanical calculator called the Z1, which is now generally considered to be the first binary computer. In 1939, he produced the Z2, the first electro-mechanical computer. The Z3 followed in 1941, and embodied most of the architectural features later described by John von Neumann, with the notable exception that only the data being worked on was held in memory. The programs were stored on celluloid film of the type used for early movies.

At around the same time (1939 – 1942), John Atanasoff and Clifford Berry were building an electronic digital computer (thought to be the first such computer in the world) at Iowa State University. The computer introduced several innovations, including the use of binary arithmetic and the separation of memory from computing functions.

One of the first practical applications for digital computers was the generation of artillery tables for the British and US military. The first digital computer used for such a purpose, called the MARK 1, weighed five tons and was developed at Harvard University by Howard Aiken and Grace Hopper. It was completed in 1944 and was used by the US Navy for gunnery and ballistic calculations. The computer employed a large number of electro-mechanical relays, was capable of a range of mathematical calculations including addition, subtraction, multiplication, and division, and could retrieve the stored results of previous calculations for use in new calculations.

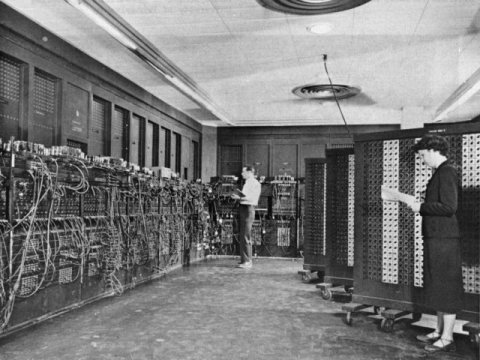

Another computer intended for military use, developed by John Mauchly and J. Presper Eckert in 1946, was the Electronic Numerical Integrator and Computer (ENIAC). Although the war with Japan was by that time over, ENIAC (which weighed thirty tons) was put to work on a number of other applications, including the development of the hydrogen bomb.

ENIAC in Ballistic Research Laboratory building 328 (U.S. Army photo)

In 1948, Eckert and Mauchly started their own company, and in 1949 launched the BINary Automatic Computer (BINAC), which used magnetic tape as a data storage medium. In 1950, the company was taken over by Remington Rand, and further research resulted in the UNIVersal Automatic Computer (UNIVAC). The first customer to take delivery of a UNIVAC computer was the US Census Bureau in 1951, and Remington Rand became the first US manufacturer of a commercial computer system. UNIVAC also had the effect of making Remington Rand a direct competitor to IBM in the computer equipment market.

At around the time Eckert and Mauchly were developing ENIAC, Sir Frederick Williams and Tom Kilburn were developing a technique for storing data using cathode-ray tubes as an early form of random access memory (RAM). This technique was in widespread use for several years until it was rendered obsolete by the use of ferrite core memory in the mid-1950s. The first computer to employ a stored program using the technique was the "Manchester Baby", developed at Manchester University by Kilburn and Geoff Tootill.

The invention of the transistor by John Bardeen, Walter Brattain, and William Shockley at Bell Labs in 1947 has been called one of the most significant developments of the 20th century, and certainly revolutionised the development of the computer. The first digital computers used vacuum tubes to implement logic circuits. These devices were bulky, consumed significant amounts of power, created a lot of heat, and had to be frequently replaced.

The replacement of vacuum tubes with transistors enabled computers to become several orders of magnitude smaller, cheaper, and more power-efficient. The first commercially produced transistorised computer was IBM’s 7090 mainframe computer, which appeared in 1960 and was the fastest computer in the world at that time. IBM went on to dominate the mainframe and minicomputer market for the next twenty years.

A replica of the first working transistor

By the late 1950s, both Texas Instruments and the Fairchild Semiconductor Corporation had independently developed the technology to place large numbers of circuit components, and the circuitry required to connect them together, onto a single silicon or germanium crystal. The resulting integrated circuit (IC), sometimes called a chip, provided the means to produce much smaller computers, at a much lower cost than was previously possible.

The first commercially available ICs appeared in 1961, marketed by Fairchild Semiconductor. From that point forward, all computers were manufactured using ICs rather than discrete components. ICs also appeared in the first portable electronic calculators. By 1970, the first memory chips were available. The newly formed Intel company released its 1103 1-kilobit dynamic RAM (DRAM) memory chip, which became the best-selling semiconductor memory chip worldwide within two years, while Fairchild Semiconductor produced the first 256-bit static RAM (SRAM) memory chip.

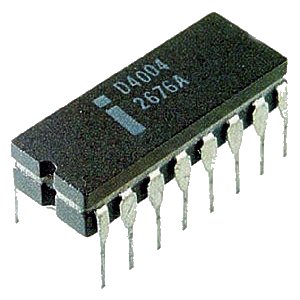

By the early 1970s, it had become possible to create very small ICs that contained very large numbers of transistors and other components, and in 1971 Intel produced the world’s first single-chip, general-purpose microprocessor, the Intel 4004. The new chip contained 2,300 transistors and offered the same processing power as the 30-ton ENIAC, despite having approximate dimensions of only 3 × 4 mm.

The chip subsequently proved successful in a broad range of applications, and despite being incomparably faster and more sophisticated, today’s 64-bit microprocessors are based on the same techniques and principles. At this time, the microprocessor is still the most complex mass-produced artefact man has ever conceived of.

The Intel D4004 microprocessor

In 1971, IBM introduced the memory disk (better known today as the floppy disk). It consisted of an eight-inch diameter flexible plastic disk coated with magnetised iron oxide, and held 110 kilobytes of data. Data is stored on the surface of a magnetic disk as microscopically small areas that hold different patterns of magnetisation, depending on whether the bit being stored is a one or a zero. The chief advantages of the floppy disk were that it was highly portable, could store relatively large amounts of data, and was extremely useful for transferring data from one location to another.

The disks became smaller as their data storage capacity increased. By the time IBM brought out their PC in 1981, the size of a floppy disk had decreased to 5.25 inches in diameter, while the amount of data that could be stored had risen to up to 1.2 megabytes (MB). The first 3.5-inch floppy was produced by Sony in 1981, with an initial storage capacity of 400 kilobytes. This later rose to 720 kilobytes, and then 1.44 megabytes. Today, floppy drives have largely disappeared, and have been replaced by high-capacity optical media (CD-ROM and DVD-ROM) and USB flash drives.

A 5.25" floppy disk

By the early 1970s, interest was growing in the concept of the microcomputer (or "personal computer"). Between 1974 and 1977, several manufacturers marketed computers that fit this general description, most in kit form. During this time a company called MITS (Micro Instrumentation Telemetry Systems), who were in the calculator business, were experiencing severe difficulties due to competition from Texas Instruments.

Forced to seek an alternative market niche, they decided to produce a personal computer in kit form. The result was the Altair 8800 (apparently the name was inspired by an episode of Star Trek), which was based on the new Intel 8080 microprocessor, had a 256-byte RAM card, and sold for $400.00.

The Altair was a huge success, and attracted the attention of programmers Bill Gates and Paul Allen (who later went on to found Microsoft). Gates and Allen wrote a version of the BASIC programming language that would run on the Altair (once the memory was increased to 4096 bytes). The Altair 8800 is considered by many to be the spark that ignited the subsequent explosion of interest in home computers.

The Altair 8800 personal computer

In 1975, Steve Wozniak and Steve Jobs started a collaboration that would produce the first Apple computer, released in April 1976, and lead to the creation of Apple Computer. The Apple I was built around the MOS Technology 6502 microprocessor, had a built-in display screen and keyboard with 8 kilobytes of dynamic RAM, and sold for $666.66.

The Apple II, also based on the 6502 processor but with colour graphics and a selling price of $1298, appeared in 1977. A later (and more expensive) version increased the size of the RAM from its initial 4 kilobytes to 48 kilobytes, and replaced the cassette tape drive originally provided with a floppy disk drive.

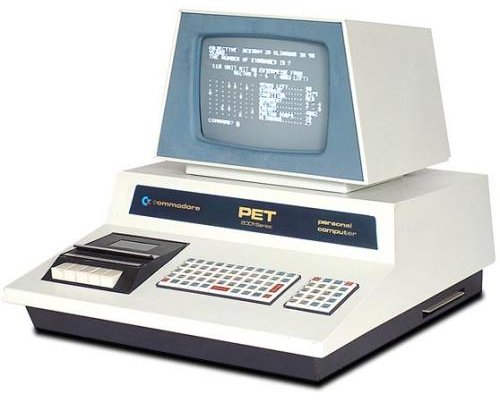

Another computer based on the 6502 microprocessor, the Commodore PET, also appeared in 1977 at a price of $795, considerably cheaper than the Apple II. It provided 4 kilobytes of RAM, built-in monochrome graphics and keyboard, and a cassette tape drive. The package included a 14k ROM-based version of BASIC written for the PET by Microsoft. The successor to the PET was the VIC-20, first seen in 1980, which was the first personal computer to sell over one million units.

The Apple II personal computer

The Commodore PET personal computer

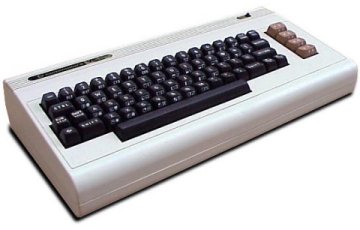

The Commodore VIC-20 personal computer

1979 saw the release of VisiCalc, a spreadsheet program written for the Apple II by Dan Bricklin. The significance of VisiCalc, the first spreadsheet program written for a personal computer, was huge. Companies were quick to see the potential time savings possible in using this software for financial projections that previously had to be done manually, and for which any adjustment entailed hours of additional work. Adjustments made to a VisiCalc spreadsheet were recalculated and applied to the entire document immediately.
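The automatic recalculation described above can be illustrated with a toy model. The sketch below is not how VisiCalc was implemented; the `Sheet` class and the cell names are hypothetical, and for simplicity formulas are re-evaluated each time they are read rather than when an input changes.

```python
class Sheet:
    """A toy spreadsheet: each cell holds a number or a formula."""

    def __init__(self):
        self.cells = {}

    def set(self, name, value):
        # A value is either a plain number or a function of the sheet.
        self.cells[name] = value

    def get(self, name):
        value = self.cells[name]
        # Formulas are evaluated on every read, so they always
        # reflect the current state of the cells they depend on.
        return value(self) if callable(value) else value


sheet = Sheet()
sheet.set("units", 100)
sheet.set("price", 2.5)
sheet.set("revenue", lambda s: s.get("units") * s.get("price"))

before = sheet.get("revenue")  # 250.0
sheet.set("units", 120)        # adjust a single input
after = sheet.get("revenue")   # 300.0, with no manual rework
print(before, after)
```

Changing one input is enough for every dependent figure to reflect the new value, which is the saving over a hand-calculated projection.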

VisiCalc was eventually eclipsed by the Lotus 1-2-3 spreadsheet for the PC, released in 1983 by the Lotus Development Corporation, which went on to buy VisiCalc's developer, Software Arts, in 1985. 1979 also saw the arrival of WordStar, a word processing package for microcomputers developed by MicroPro International, which became the most popular software application of the early 1980s.

In August 1981 IBM launched the IBM 5150 Personal Computer (PC). It was built around a 4.77 MHz Intel 8088 microprocessor, had 16 kilobytes of memory (expandable to 256 kilobytes), and either one or two 160-kilobyte floppy disk drives. A colour monitor was optional, and prices started at $1,565.

Perhaps the most significant development was that the new computer was constructed using standard, off-the-shelf components, had an "open" architecture, and was marketed through independent distributors. Although IBM "clones" have proliferated in the intervening years, and both the speed and the capacity of the IBM PC have grown at an astonishing rate, the underlying architecture of the personal computer has remained essentially unchanged for nearly forty years.

The advent of IBM's PC also afforded an opportunity to Bill Gates, Paul Allen, and the fledgling Microsoft company to become serious players in the software industry when they were asked to provide the operating system for the new computer. The result was IBM PC-DOS (Disk Operating System), later marketed by Microsoft virtually unchanged as MS-DOS.

The IBM 5150 personal computer

In 1983, Apple released the "Lisa" computer, which was aimed at businesses. It was the first personal computer to implement a graphical user interface (GUI), based on ideas first developed by the Xerox Corporation at their Palo Alto Research Center (PARC) in the 1970s. The package included a hierarchical file system and a suite of office applications. The user interface included virtually all of the features found on a modern desktop computer, including windows, icons, menus and a mouse.

The mouse was by no means a new device, having been first invented in 1964 by Douglas Engelbart as an "X-Y position indicator for a display system", but it had not previously been paired with a personal computer. The Lisa was not a commercial success, but Apple learned some valuable lessons from the experience and in 1984 produced the Lisa 2 (subsequently renamed as the Macintosh XL). At approximately half the price of the original model, the Lisa 2 / Macintosh computer met with somewhat more commercial success, although it was never to seriously challenge the IBM PC and its clones in the business world.

The original Macintosh personal computer

Despite this, the significance of the GUI in terms of popularising personal computers was not lost on Microsoft. In November 1985 they released the first version of the Windows operating system. The new "operating system" was, in fact, little more than a desktop management application that ran on top of the real operating system, MS-DOS. It was slow and prone to errors, and triggered a legal dispute between Microsoft and Apple Computer over alleged copyright infringement. Nevertheless, the arrival of software programs written for the new Windows environment, notably a desktop publishing package called Aldus PageMaker (the first application of its kind for the PC), helped to keep Windows afloat. Realising that they still had some way to go, Microsoft set about creating an improved version of Windows.

Windows 2.0 was released in December 1987, and made Windows-based computers look more like the Apple Macintosh. Although this triggered a further lawsuit from Apple, Microsoft eventually won the ensuing court case and meanwhile released Windows version 3.0 in May 1990. The new version had many improvements over the previous two and, significantly, gained broad support from third-party vendors. The proliferation of Windows-compatible software was an incentive for end users to purchase a copy of Windows 3.0, and three million copies were sold in the first year.

Version 3.1 became available in April 1992, and sold three million copies in the first two months following its release. Windows 3.1 was the dominant operating system for PCs during the next three years, only being displaced by the advent of Windows 95, which was a true operating system in the sense that it did not run over MS-DOS (although an updated version of DOS was still a major component of the operating system’s core software).

Windows 95 was highly user-friendly, and included an integrated TCP/IP protocol stack and dial-up networking software. Successive versions of the Windows desktop operating system (the current version is Windows 10) have added new features, support for new hardware, and an increasingly sophisticated range of value-added services.

The predominance of Microsoft in the desktop operating system market now looks to be under serious threat from the open source software community, with Linux now taking a significant (albeit still relatively small) share of the desktop market, and application software like OpenOffice being offered as a viable (and freely licensed) alternative to Microsoft’s proprietary software. Contributing to this process are the apparent willingness of hardware vendors to provide support for operating systems other than Windows, and the growing community of open source users willing to contribute their time and expertise in promoting the use of open source software.

Meanwhile the cost of computer hardware has fallen to such an extent that ownership of one or more personal computers is almost as commonplace as ownership of a television or refrigerator, while the parameters describing maximum processor speed, memory size and storage capacity are being adjusted almost daily.